GitHub - moritzhambach/CPU-vs-GPU-benchmark-on-MNIST: compare the training duration of a CNN on a CPU (i7-8550U) vs. a GPU (MX150) with CUDA, depending on batch size
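A benchmark like the one described above boils down to timing the same training routine once per batch size and per device. The sketch below is a framework-agnostic timing harness. It is not taken from the repository. The names `time_per_batch_size` and `dummy_epoch` are hypothetical, and `dummy_epoch` is only a pure-Python stand-in for a real CNN training epoch. To compare CPU and GPU, you would call the harness twice, once with a `train_fn` pinned to each device. If `train_fn` launches asynchronous GPU work, it must block until that work finishes before returning, otherwise the timings will be too low. In PyTorch, for example, you would call `torch.cuda.synchronize()`.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def time_per_batch_size(
    train_fn: Callable[[int], None],
    batch_sizes: Iterable[int],
    repeats: int = 3,
    warmup: int = 1,
) -> Dict[int, float]:
    """Return the median wall-clock seconds of train_fn(batch_size) for each batch size."""
    results: Dict[int, float] = {}
    for bs in batch_sizes:
        # Warm-up runs absorb one-off costs (e.g. kernel compilation, memory allocation)
        # so they do not inflate the measured runs.
        for _ in range(warmup):
            train_fn(bs)
        samples = []
        for _ in range(repeats):
            start = time.perf_counter()
            train_fn(bs)  # must block until all (possibly asynchronous) work is done
            samples.append(time.perf_counter() - start)
        # The median is less sensitive than the mean to a single slow outlier run.
        results[bs] = statistics.median(samples)
    return results


def dummy_epoch(batch_size: int, n_samples: int = 6_000) -> None:
    # Hypothetical stand-in for one training epoch over n_samples examples:
    # a fixed per-batch loop overhead plus work proportional to the batch size.
    for _ in range(n_samples // batch_size):
        sum(i * i for i in range(batch_size))


if __name__ == "__main__":
    for bs, secs in time_per_batch_size(dummy_epoch, [32, 128, 512]).items():
        print(f"batch_size={bs:4d}  median={secs:.4f}s")
```

Larger batches mean fewer iterations per epoch, so the fixed per-batch overhead is paid less often. On a GPU, larger batches also keep more cores busy, which is the main reason the CPU/GPU gap typically depends on batch size.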

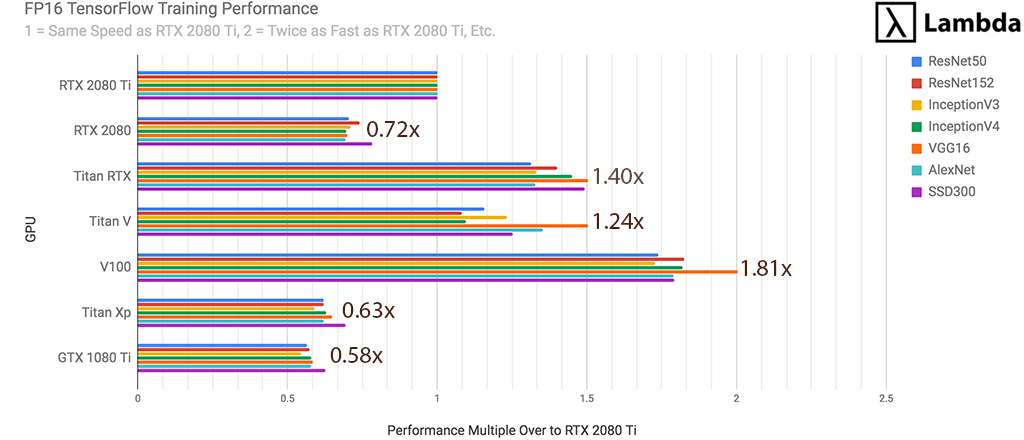

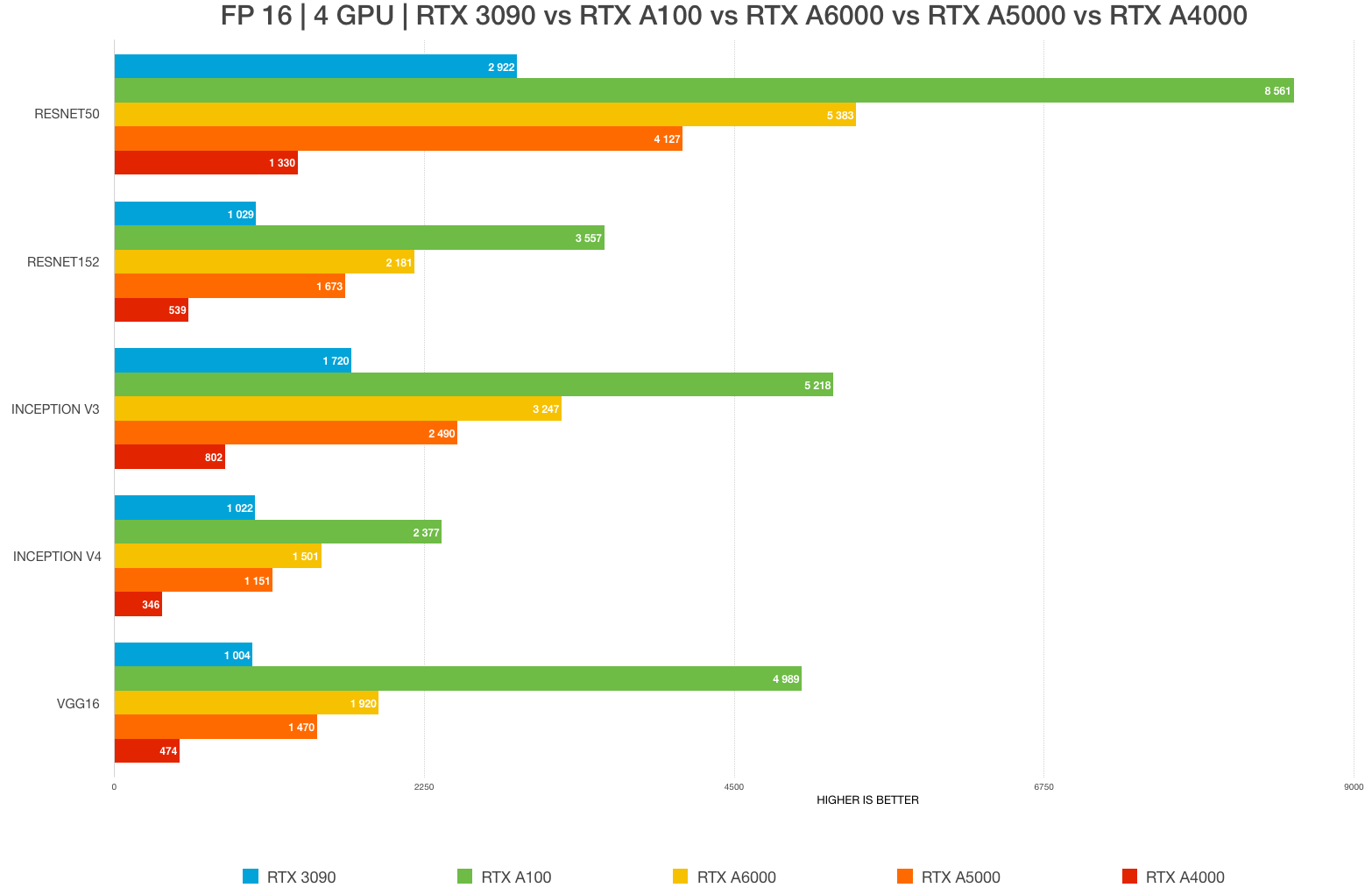

Best GPU for AI/ML, deep learning, and data science in 2023: RTX 4090 vs. RTX 3090 vs. RTX 3080 Ti vs. A6000 vs. A5000 vs. A100 benchmarks (FP32, FP16) (BIZON)

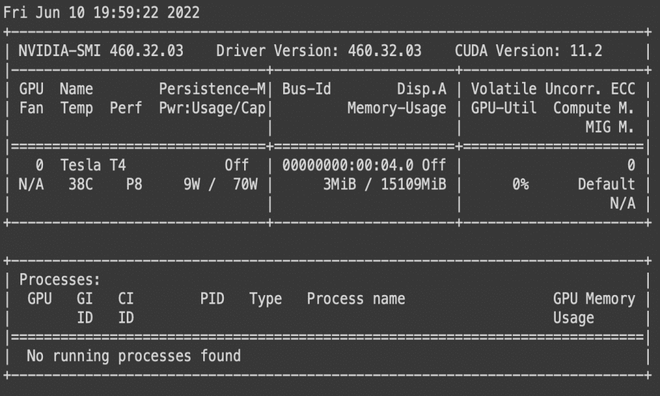

GitHub - miladfa7/Install-Tensorflow-GPU-2.1.0-on-Linux-Ubuntu-18.04: easily install TensorFlow-GPU 2.1.0 on Linux Ubuntu 18.04 with CUDA 10 and cuDNN 7.6.5; download package dependencies via direct links